*cross-posted on Culture Digitally*

I recently got a call from the CBC asking whether I had heard of Crystal Knows and would be open to an interview. I quickly agreed. Little did they know that anyone I bump into these days has trouble getting me to stop talking about mobile apps! At the recent ICA Mobile preconference in sunny Puerto Rico, Amy Hasinoff (Assistant Professor, University of Colorado Denver) and I talked about our research on apps designed to prevent sexual violence – all 215 of them. We also threw in two speculative app designs, one utopic and one dystopic, to critically reflect on the broader relationship between social change and technological development. Our research aims to probe the values and assumptions embedded in app design, and my analysis of Crystal heads in the same direction.

If you have yet to come across this app, there is plenty written about it. The two main taglines for Crystal are:

The biggest improvement to email since spell-check.

Crystal is a new technology built upon an ancient principle: Communicate with empathy.

So what does it do? It mines data posted publicly on Facebook, Twitter, LinkedIn, Google and “other sources,” and then uses “proprietary personality detection technology” to determine how people communicate and how they would like you to communicate with them. Basically, Crystal automates the process of building instant rapport with someone you don’t know at all or don’t know very well … yet, because Crystal will help you get there!

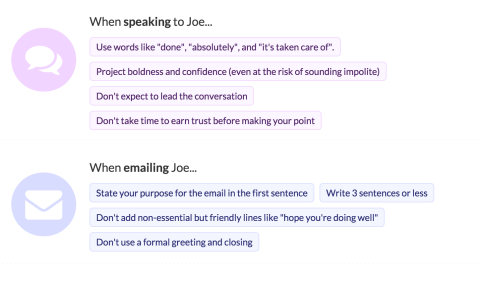

Here is an example of the specific advice Crystal offers about the communication style you should adopt with ‘Joe’:

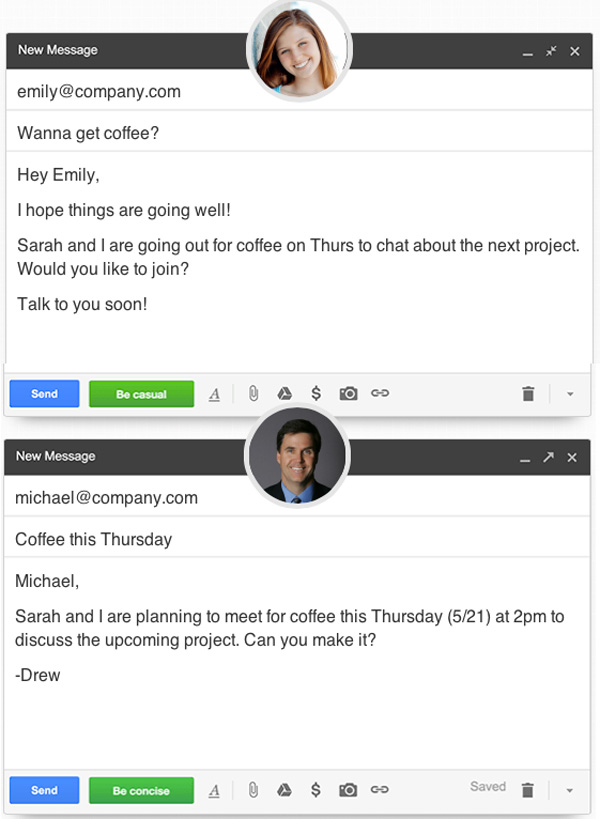

There is also a Google Chrome extension that integrates with Gmail, offering advice on the fly as you write your emails. Two of Crystal’s examples show how the recommended communication style changes depending on whether you are writing to Emily – ‘be casual!’ – or Michael – ‘be concise!’

Some reviewers want the app to do better, since its algorithmic conclusions are not always accurate (see this Reddit thread for more examples of inaccuracies), while many have deemed Crystal “creepy” and a “stalking app.”

Public vs Private

Since the app uses publicly available data, people are quick to point out that if you don’t want your data mined, you shouldn’t make it available in the first place. In other words: “Delete your Facebook account if you don’t want to be stalked!” Students who have been cyberbullied on social media have had to roll their eyes at similar remarks from principals and teachers, who seem to think that bullying begins and ends with one’s use of a platform. We can’t forget that many people feel a great deal of social pressure to participate on social media sites (and online more generally) and would genuinely lose out socially if they quit.

Even if that doesn’t sway you, the overall argument relies on an understanding of public versus private that lacks nuance. At moments like this I tend to return to an old example from danah boyd (Principal Researcher at Microsoft Research). Recognizing that our assumptions and norms around privacy change over time, boyd has shown through her research with teenagers that when someone makes information publicly available in one context, we can’t presume they are okay with that information being taken and placed in another context, even though it was available for the taking. boyd offers the example of a police officer delivering a presentation about privacy to a group of teenagers at their high school. The PowerPoint slides accompanying the presentation included Facebook profile images of students in the audience, plucked from the site. Students were outraged! Not because the police officer could access the images, which anyone could, but because the images were taken out of one context and put into another without the students’ consent. Are these teens just misunderstanding the rules of privacy? Is there only one set of rules we ought to follow? Consider another example: Alex is a teenager with an old-school paper diary in his room. Alex knows that his parents could enter his room and find his diary while he is out of the house. Nevertheless, Alex does not expect that his parents will invade his privacy. All this to say:

Simply by participating online, can others assume we’ve given consent for any publicly available data we offer to be used by anyone, for any purposes, forever?

And this isn’t the end of the story for Crystal. Sure, it mines publicly available data, but it also mines data about you that others have provided, and it is unclear how much use it makes of more clearly demarcated private data. In the Privacy Policy (yes, I read through these documents, along with Terms of Service, and I think we should all do more of it, especially with a critical eye), it is unclear how much “privileged information to which only you have access” is used. Crystal connects to your ‘Connected Accounts’ – in other words, people you have friended or followed, etc. By granting Crystal access to your social media accounts, you are granting it access to this privileged information. The policy continues:

“In order to provide users briefings, we may need to process this data (only as listed in the “With Whom” section; however, the private data itself will never be displayed to any other Crystal user unless you explicitly ask us to).”

So let’s say I have a friend named Corrie on Facebook. I have access to Corrie’s posts, but she has carefully combed through Facebook’s privacy settings to ensure that no one but her ‘friends’ can see them. Presumably, then, I am giving Crystal access to Corrie’s posts (without her consent or knowledge) so that I can be briefed about her personality and her communication likes and dislikes. Perhaps this is still okay as long as Crystal’s technology and I are the only ones who access it? Yet according to the policy, I can also have this information displayed to other Crystal users if I explicitly ask Crystal to do so? I have not joined Crystal, so I may be misreading the policy, but such are the limits on knowledge often built into privacy policies, even when you do have access to the site.

There is also a slippery slope here that we should watch out for: has Crystal secured contracts with any of the major platforms it uses (Facebook, Twitter, LinkedIn, and Google)? What is the nature of these contracts, and what might future contracts look like? At what point does Crystal begin collecting private data to perform better personality analyses? What about archived data stored by social media sites that you thought was deleted? For those who tinker with their online reputation management, how will they adjust, given that they already have little control over ‘publicly’ available data about them, and that this data is now also being scraped by new technologies like Crystal?

Black Box Algorithms

Another major unknown related to Crystal’s data usage is summed up by the black box problem. Crystal uses “proprietary personality detection technology.” This means we don’t know what information is included in the algorithm’s calculations, how much weight is attributed to different data points, or even how many algorithms or processes are involved. Does Crystal access information about gender? What about race? What values and assumptions are baked into Crystal’s personality detection technology? In the examples above, the woman (Emily) should be addressed casually while the man (Michael) should be addressed concisely. I know these are just examples, likely selected by Crystal’s marketing team, but they still raise red flags about gendered assumptions that could be baked into these black box algorithms.

This technology is programmed to detect and scrape information about people of interest, understand it as data, process it through carefully tuned algorithms, and then extract some sort of ‘truth’ (or, personality characterization, along with a proposed accuracy rating). Some have excitedly proposed that Crystal is one step towards the “potential for getting email communication right on a massive scale” – as though all we need to do is uncover the right formula now that we have access to all of this data. The hunt to discover facts from enormous data sets is on!

Algorithmic Errors

Big data comes with many losses, one of which is contextual information. When cookies store information about your browsing habits and that information is used to create targeted advertisements for you, are those ads always useful? Algorithms get it wrong. A recent CSCW keynote by Zeynep Tufekci drove home the message that while we have spent a great deal of energy trying to understand how humans make mistakes, we are only just starting to scratch the surface of the types of errors that result from algorithmic decision making.

So one question becomes:

When Crystal gets it wrong, is there a pattern related to the types of people who are misread?

Since some commentators have found Crystal to be eerily accurate, other questions follow:

Who is the software designed for? Who does it get right?

I would imagine that Crystal more accurately predicts the personalities of people who put a lot of their lives online and are deliberately forthcoming, open, and genuine. Some people rely heavily on developing a genuine online persona because their business depends on it. Others participate online in completely different ways. Some people use a great deal of sarcasm, which could be technically difficult to interpret. Context gets lost in big data, and so do the many reasons why some people are hard to pin down through algorithmic processes. If levels of participation are a key factor in accuracy ratings, how do users interpret people whom Crystal finds difficult to pin down? Are they poor communicators?

Some people do not participate in social media for reasons that are not very visible in our society, reasons that, frankly, we do not talk about enough. For some, it is not that they don’t feel the social pressure, and it is not that they wouldn’t enjoy being part of friend networks and receiving every update from a baby bump to a local protest. Instead, they opt out because their specific privacy needs leave them no other option. Victims/survivors of sexual violence are one group of people with very particular needs, but there are many more.

So if Crystal or a similar service becomes widespread – and certainly the drive towards millions of users and exorbitant financial success is also baked into these technologies – what happens to the people who opt out of ‘real name’ online participation? What impression does it make on a Crystal user when the software cannot detect their target or the accuracy rating is low? This may not have implications for everyday users, but Crystal seems to be largely targeted towards business use. During the final round of a hiring process, when job applicants have already been stalked to some degree online, does Crystal swoop in and tip the scales in one direction or another? Consider this feature:

As always, there are many intended and unintended uses for technologies, and Crystal is no exception. Stalking, dating, and marketing are just a few potential uses that people have pointed out so far. How users interpret Crystal’s output is also up for debate. Until we know how widely used Crystal (or a similar service) becomes, we won’t be able to assess how communication norms have begun to shift and, in turn, shape future iterations of this technology.

Accountability and Advocacy

People participate online to different degrees and for a wide range of purposes. Are Crystal’s algorithms attuned to these nuances? We don’t know, because they are proprietary. Any assessment of bias is difficult and perhaps only possible through reverse engineering and the recent turn towards algorithmic accountability. Social values and assumptions are baked into technologies, often in ways that are initially imperceptible to designers. The consequences of these biases, and of the errors and misjudgments that result, can be insignificant for some people and harmful for others.

When we examine the capabilities of new technologies like Crystal, we should also consider how we can develop and advocate for technical patches that give us more control over how our data is used. For example, given that Crystal relies on social media platforms for a great deal of its data, we can imagine a patch that would let platform users decide whether apps used by people in their social networks can access their data.

Now … how do we go about advocating for it?